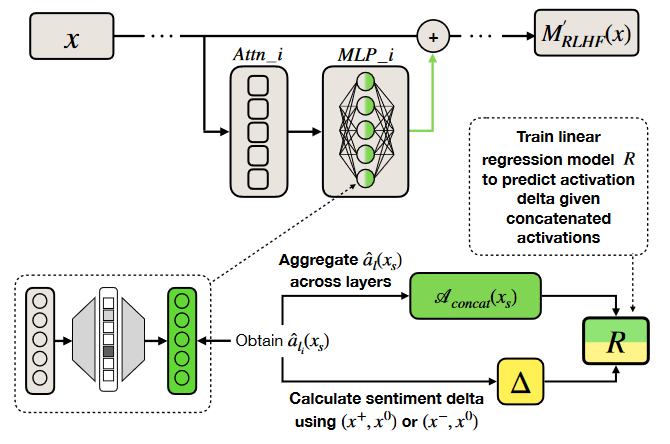

My contributions include leading research with the UK AI Security Institute on machine unlearning for AI safety and developing the N2G algorithm adopted by OpenAI to evaluate sparse autoencoders for interpretability. I also spearheaded the Alan Turing Institute's response to the UK House of Lords inquiry on large language models and worked with Anthropic's Alignment team (2024-2025) on studies investigating deception and reward hacking, among other topics.

My research is funded by OpenAI, Anthropic, Schmidt Sciences, the Future of Life Institute, and NVIDIA, among others. I'm affiliated with Cambridge's CSER, NTU's Digital Trust Centre, and Edinburgh's Informatics, and I am a member of ELLIS. Previously, I was a researcher at Amazon and Huawei, and Co-director and Head of Research at Apart Research.

I'm looking for motivated people interested in AI Safety, Interpretability, and Technical AI Governance. Before reaching out, please review my Research Agenda and tell me which aspects resonate with your interests or how your work fits into these directions. I value collaborative work and am especially committed to partnering with researchers from underrepresented, marginalized, and otherwise disadvantaged backgrounds. If this resonates with you, please contact me at fazl[at]robots[dot]ox[dot]ac[dot]uk.