Fazl Barez

a form impregnating the barren line of time

a form conscious of an image

that returns from a feast in a mirror.

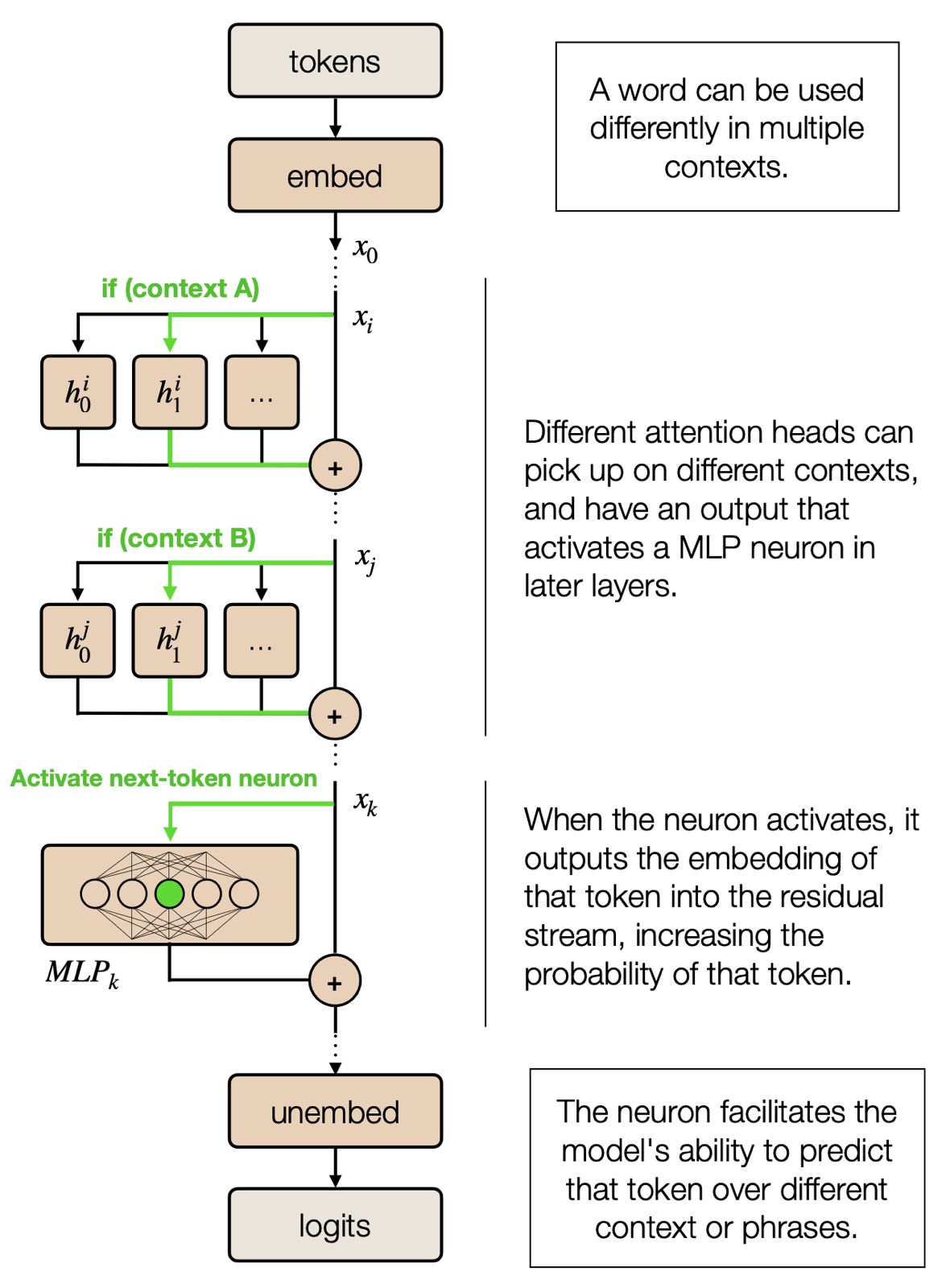

My work has focused on understanding what happens inside neural networks and using that understanding to make AI systems safer. As models grow more capable, I think the most urgent challenge in interpretability is moving from observation to action—building systems where we can trace a model's internal reasoning, verify it, and correct it when something is wrong. Specific directions I'm working on:

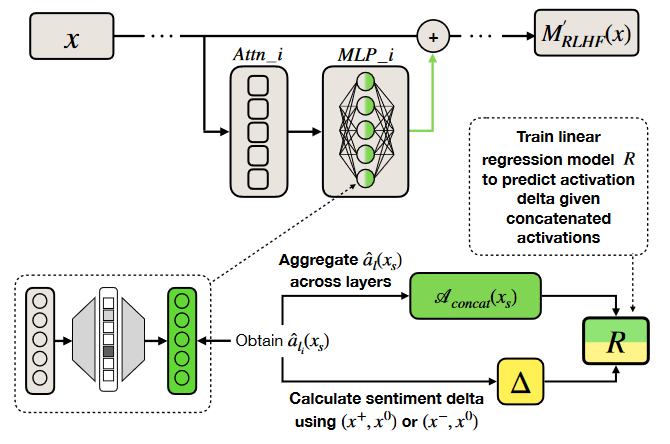

• When a model produces a surprising or harmful output, how can we trace the internal cause—automatically and at scale?

• How can we remove a dangerous capability from a model and be confident it won't come back?

• When a model shows its reasoning, how do we know it actually computed things that way, and how do we give regulators evidence that it did?

Affiliations: Cambridge CSER, NTU Digital Trust Centre, Edinburgh Informatics, and ELLIS.

Beyond my core research, I'm drawn to neuroscience, psychology, philosophy of science, poetry, and literature.

I'm looking for motivated collaborators. If my Research Agenda speaks to your interests, I'd love to hear which directions excite you and how your work connects. I'm especially committed to partnering with researchers from underrepresented and disadvantaged backgrounds.